Bivariate Probit

2017-05-04

Built using Zelig version 5.1.0.90000

Bivariate Probit Regression for Two Dichotomous Dependent Variables with bprobit from ZeligChoice.

Use the bivariate probit regression model if you have two binary dependent variables \((Y_1, Y_2)\) and wish to model them jointly as a function of some explanatory variables. Each pair of dependent variables \((Y_{i1}, Y_{i2})\) has four potential outcomes: \((Y_{i1}=1, Y_{i2}=1)\), \((Y_{i1}=1, Y_{i2}=0)\), \((Y_{i1}=0, Y_{i2}=1)\), and \((Y_{i1}=0, Y_{i2}=0)\). The joint probability for each of these four outcomes is modeled with three systematic components: the marginal probabilities \(\Pr(Y_{i1} = 1)\) and \(\Pr(Y_{i2} = 1)\), and the correlation parameter \(\rho\) for the two marginal distributions. Each of these systematic components may be modeled as a function of a (possibly different) set of explanatory variables.

Syntax

First load packages:

library(zeligverse)

With reference classes:

With the Zelig 4 compatibility wrappers:

Input Values

In every bivariate probit specification, there are three equations, which correspond to each dependent variable (\(Y_1\), \(Y_2\)) and the correlation parameter \(\rho\). Since the correlation parameter does not correspond to one of the dependent variables, the model estimates \(\rho\) as a constant by default. Hence, only two formulas (for \(\mu_1\) and \(\mu_2\)) are required. If the explanatory variables for \(\mu_1\) and \(\mu_2\) are the same and effects are estimated separately for each parameter, you may use the following shorthand:

fml <- list(cbind(Y1,Y2) ~ X1 + X2)

which has the same meaning as:

fml <- list(mu1 = Y1 ~ X1 + X2,
            mu2 = Y2 ~ X1 + X2)

Anticipated feature, not currently enabled:

You may use the function tag() to constrain variables across equations. The tag() function takes a variable and a label for the effect parameter. Below, the constrained effect of x3 in both equations is called the age parameter:

fml <- list(mu1 = y1 ~ x1 + tag(x3, "age"),
            mu2 = y2 ~ x2 + tag(x3, "age"))

You may also constrain different variables across different equations to have the same effect.
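To make the cbind() shorthand above more concrete, the following sketch uses only base R to show how such a formula yields a two-column response and a single design matrix shared by both equations. The data frame toy and its values are hypothetical, and this illustrates standard R formula handling only; it does not show how Zelig or VGAM process the formula internally.

```r
# Hypothetical toy data; the names Y1, Y2, X1, X2 mirror the placeholders
# used in the formulas above.
set.seed(1)
toy <- data.frame(
  Y1 = rbinom(20, 1, 0.5),
  Y2 = rbinom(20, 1, 0.5),
  X1 = rnorm(20),
  X2 = rnorm(20)
)

# The shorthand: one formula with a two-column response.
f_short <- cbind(Y1, Y2) ~ X1 + X2

mf <- model.frame(f_short, data = toy)
y  <- model.response(mf)                    # 20 x 2 matrix (columns Y1, Y2)
X  <- model.matrix(attr(mf, "terms"), mf)   # design matrix shared by both equations

dim(y)
colnames(y)
colnames(X)
```

Because both equations share the same right-hand side, one design matrix serves for \(\mu_1\) and \(\mu_2\); the long form with mu1 and mu2 is needed only when the two sets of explanatory variables differ.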

Examples

Basic Example

Load the data and estimate the model:

data(sanction)

z.out1 <- zelig(cbind(import, export) ~ coop + cost + target,
                model = "bprobit", data = sanction)
summary(z.out1)

## Model:
##
## Call:
## z5$zelig(formula = cbind(import, export) ~ coop + cost + target,
##     data = sanction)
##
##
## Pearson residuals:
##                Min      1Q  Median     3Q   Max
## probit(mu1) -2.164 -0.5307 -0.2526 0.4621 2.168
## probit(mu2) -5.115 -0.5019  0.0590 0.6432 2.948
## rhobit(rho) -2.172 -0.4182  0.1030 0.2874 4.296
##
## Coefficients:
##               Estimate Std. Error z value Pr(>|z|)
## (Intercept):1 -1.71806    0.61883  -2.776  0.00550
## (Intercept):2 -1.86625    0.60169  -3.102  0.00192
## (Intercept):3 -0.38106    0.49828  -0.765  0.44443
## coop:1         0.16794    0.20102   0.835  0.40347
## coop:2        -0.02836    0.19841  -0.143  0.88634
## cost:1         1.46870    0.36948   3.975 7.04e-05
## cost:2         1.53177    0.37747   4.058 4.95e-05
## target:1      -0.75222    0.27500  -2.735  0.00623
## target:2      -0.28211    0.25531  -1.105  0.26918
##
## Number of linear predictors: 3
##
## Names of linear predictors: probit(mu1), probit(mu2), rhobit(rho)
##
## Log-likelihood: -72.0482 on 225 degrees of freedom
##
## Number of iterations: 5

## Next step: Use 'setx' method

By default, zelig() estimates two effect parameters for each explanatory variable in addition to the correlation coefficient. This formulation is parametrically independent (it estimates unconstrained effects for each explanatory variable) but stochastically dependent, because the two equations share a correlation parameter. Generate baseline values for the explanatory variables (with cost set to 1, net gain to sender) and alternative values (with cost set to 4, major loss to sender):

x.low <- setx(z.out1, cost = 1)
x.high <- setx(z.out1, cost = 4)

Simulate fitted values and first differences:

s.out1 <- sim(z.out1, x = x.low, x1 = x.high)summary(s.out1)##

## sim x :

## -----

## ev

## mean sd 50% 2.5% 97.5%

## Pr(Y1=0, Y2=0) 0.764414729 0.081132438 0.777455707 5.840602e-01 0.89361936

## Pr(Y1=0, Y2=1) 0.165326310 0.071943019 0.157151653 5.831875e-02 0.34341225

## Pr(Y1=1, Y2=0) 0.062336183 0.043134762 0.050826223 1.036291e-02 0.17478426

## Pr(Y1=1, Y2=1) 0.007922778 0.009676822 0.004710452 9.152771e-05 0.03701662

## pv

## 0 1

## (Y1=0, Y2=0) 0.212 0.788

## (Y1=0, Y2=1) 0.859 0.141

## (Y1=1, Y2=0) 0.937 0.063

## (Y1=1, Y2=1) 0.992 0.008

##

## sim x1 :

## -----

## ev

## mean sd 50% 2.5%

## Pr(Y1=0, Y2=0) 1.609406e-05 0.0001060622 1.699806e-08 1.568586e-11

## Pr(Y1=0, Y2=1) 1.477314e-02 0.0360107035 2.171428e-03 5.111826e-06

## Pr(Y1=1, Y2=0) 3.348953e-03 0.0139152116 1.875177e-04 4.215531e-08

## Pr(Y1=1, Y2=1) 9.818618e-01 0.0379522763 9.956782e-01 8.845988e-01

## 97.5%

## Pr(Y1=0, Y2=0) 0.0001209118

## Pr(Y1=0, Y2=1) 0.1069029540

## Pr(Y1=1, Y2=0) 0.0248877996

## Pr(Y1=1, Y2=1) 0.9999419810

## pv

## 0 1

## (Y1=0, Y2=0) 1.000 0.000

## (Y1=0, Y2=1) 0.989 0.011

## (Y1=1, Y2=0) 0.999 0.001

## (Y1=1, Y2=1) 0.012 0.988

## fd

## mean sd 50% 2.5% 97.5%

## Pr(Y1=0, Y2=0) -0.76439863 0.08114943 -0.77745517 -0.8936194 -0.584056187

## Pr(Y1=0, Y2=1) -0.15055317 0.08233919 -0.14431943 -0.3309139 -0.021439946

## Pr(Y1=1, Y2=0) -0.05898723 0.04643810 -0.04941808 -0.1714881 -0.002845955

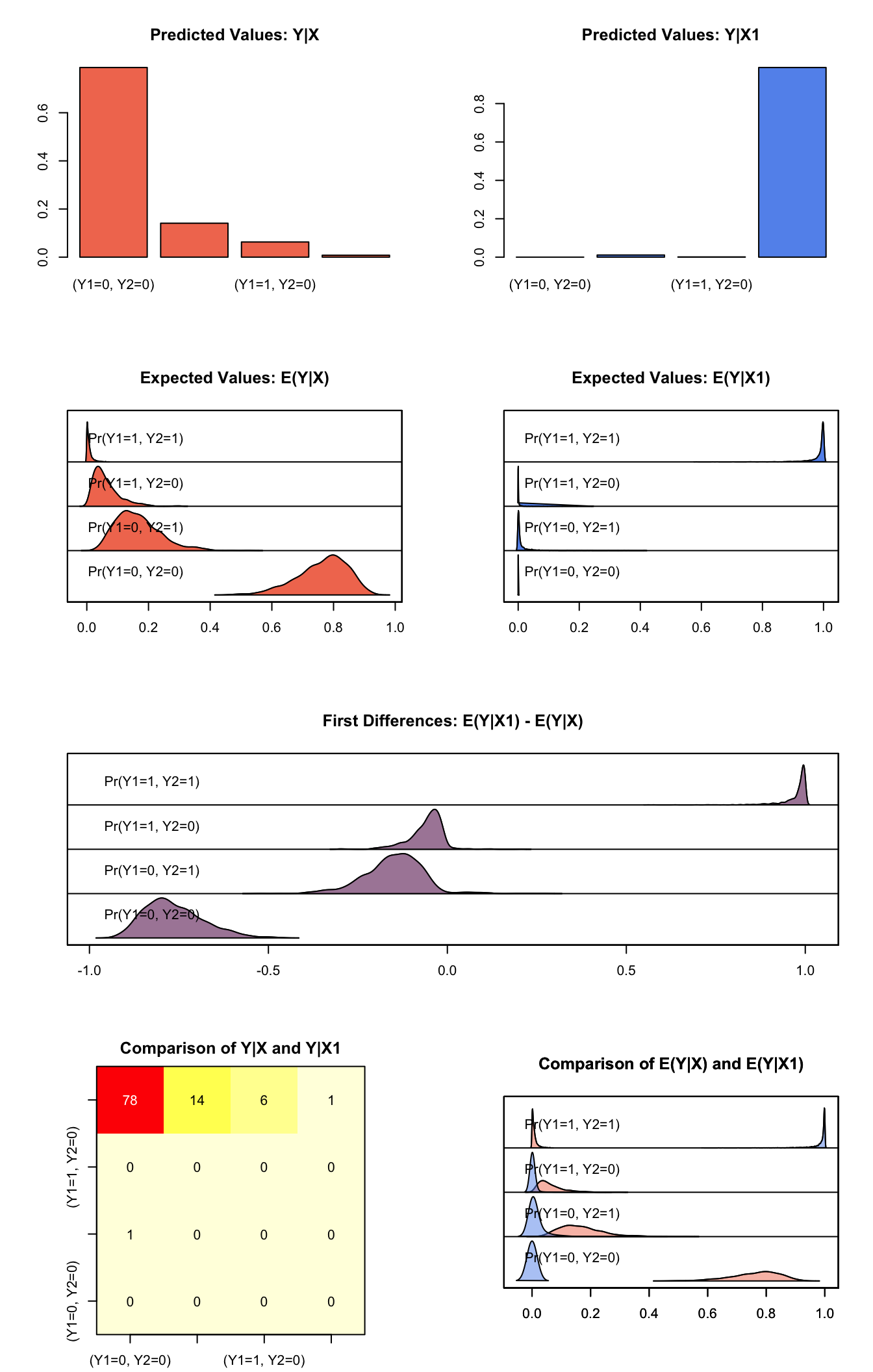

## Pr(Y1=1, Y2=1) 0.97393904 0.04223666 0.98879594 0.8695511 0.999339537plot(s.out1)

Graphs of Quantities of Interest for Bivariate Probit

Model

For each observation, define two binary dependent variables, \(Y_1\) and \(Y_2\), each of which takes the value of either 0 or 1 (in the following, we suppress the observation index \(i\)). We model the joint outcome \((Y_1, Y_2)\) using the two marginal probabilities, one for each dependent variable, and the correlation parameter, which describes how the two dependent variables are related.

- The stochastic component is described by two latent (unobserved) continuous variables which follow the bivariate Normal distribution:

\[ \begin{aligned} \left ( \begin{array}{c} Y_1^* \\ Y_2^* \end{array} \right ) &\sim & N_2 \left \{ \left ( \begin{array}{c} \mu_1 \\ \mu_2 \end{array} \right ), \left( \begin{array}{cc} 1 & \rho \\ \rho & 1 \end{array} \right) \right\}, \end{aligned} \]

where \(\mu_j\) is the mean of \(Y_j^*\) and \(\rho\) is a scalar correlation parameter. The following observation mechanism links the observed dependent variables, \(Y_j\), with these latent variables:

\[ \begin{aligned} Y_j & = & \left \{ \begin{array}{cc} 1 & {\rm if} \; Y_j^* \ge 0, \\ 0 & {\rm otherwise.} \end{array} \right. \end{aligned} \]

- The systematic components for each observation are

\[ \begin{aligned} \mu_j & = & x_{j} \beta_j \quad {\rm for} \quad j=1,2, \\ \rho & = & \frac{\exp(x_3 \beta_3) - 1}{\exp(x_3 \beta_3) + 1}. \end{aligned} \]
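As a rough illustration of the model above, the following base R sketch simulates data from it: it builds \(\mu_1\), \(\mu_2\), and \(\rho\) from the systematic components, draws the latent variables from the bivariate Normal distribution, and applies the observation mechanism. All numerical values (the covariate x, beta1, beta2, and eta3) are hypothetical and chosen only for illustration. Here eta3 stands for \(x_3 \beta_3\) with an intercept only, which matches the default of a constant \(\rho\).

```r
set.seed(42)
n <- 5000

# Hypothetical covariate and coefficients (not estimates from any data set).
x     <- rnorm(n)
beta1 <- c(-0.5,  1.0)   # intercept and slope for mu_1
beta2 <- c( 0.2, -0.7)   # intercept and slope for mu_2
eta3  <- 0.8             # x_3 * beta_3, intercept only

# Systematic components.
mu1 <- beta1[1] + beta1[2] * x
mu2 <- beta2[1] + beta2[2] * x
rho <- (exp(eta3) - 1) / (exp(eta3) + 1)   # algebraically equal to tanh(eta3 / 2)

# Stochastic component: standard Normal errors with correlation rho.
e1 <- rnorm(n)
e2 <- rho * e1 + sqrt(1 - rho^2) * rnorm(n)
y1_star <- mu1 + e1
y2_star <- mu2 + e2

# Observation mechanism: Y_j = 1 if Y_j^* >= 0, and 0 otherwise.
y1 <- as.integer(y1_star >= 0)
y2 <- as.integer(y2_star >= 0)

table(y1, y2)    # counts for the four joint outcomes
cor(e1, e2)      # sample correlation of the latent errors, close to rho
```

The transformation used for \(\rho\) maps any real value of \(x_3 \beta_3\) into the interval \((-1, 1)\), which is why the third linear predictor can be estimated without constraints.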

Quantities of Interest

For \(n\) simulations, expected values form an \(n \times 4\) matrix.

- The expected values (qi$ev) for the bivariate probit model are the predicted joint probabilities. Simulations of \(\beta_1\), \(\beta_2\), and \(\beta_3\) (drawn from their sampling distributions) are substituted into the systematic components to find simulations of the predicted joint probabilities \(\pi_{rs}=\Pr(Y_1=r, Y_2=s)\):

\[ \begin{aligned} \pi_{11} &= \Pr(Y_1^* \geq 0 , Y_2^* \geq 0) &= \int_0^{\infty} \int_0^{\infty} \phi_2 (\mu_1, \mu_2, \rho) \, dY_2^*\, dY_1^* \\ \pi_{10} &= \Pr(Y_1^* \geq 0 , Y_2^* < 0) &= \int_0^{\infty} \int_{-\infty}^{0} \phi_2 (\mu_1, \mu_2, \rho) \, dY_2^*\, dY_1^*\\ \pi_{01} &= \Pr(Y_1^* < 0 , Y_2^* \geq 0) &= \int_{-\infty}^{0} \int_0^{\infty} \phi_2 (\mu_1, \mu_2, \rho) \, dY_2^*\, dY_1^*\\ \pi_{00} &= \Pr(Y_1^* < 0 , Y_2^* < 0) &= \int_{-\infty}^{0} \int_{-\infty}^{0} \phi_2 (\mu_1, \mu_2, \rho) \, dY_2^*\, dY_1^*\\ \end{aligned} \]

where \(r\) and \(s\) may take a value of either 0 or 1, and \(\phi_2\) is the bivariate Normal density.

- The predicted values (qi$pr) are draws from the multinomial distribution given the expected joint probabilities.

- The first difference (qi$fd) in each of the predicted joint probabilities is given by

\[ \textrm{FD}_{rs} = \Pr(Y_1=r, Y_2=s \mid x_1)-\Pr(Y_1=r, Y_2=s \mid x). \]

- The risk ratio (qi$rr) for each of the predicted joint probabilities is given by

\[ \textrm{RR}_{rs} = \frac{\Pr(Y_1=r, Y_2=s \mid x_1)}{\Pr(Y_1=r, Y_2=s \mid x)}. \]

- In conditional prediction models, the average expected treatment effect (att.ev) for the treatment group is

\[ \frac{1}{\sum_{i=1}^n t_i}\sum_{i:t_i=1}^n \left\{ Y_{ij}(t_i=1) - E[Y_{ij}(t_i=0)] \right\} \textrm{ for } j = 1,2, \]

where \(t_i\) is a binary explanatory variable defining the treatment (\(t_i=1\)) and control (\(t_i=0\)) groups. Variation in the simulations is due to uncertainty in simulating \(E[Y_{ij}(t_i=0)]\), the counterfactual expected value of \(Y_{ij}\) for observations in the treatment group, under the assumption that everything stays the same except that the treatment indicator is switched to \(t_i=0\).

- In conditional prediction models, the average predicted treatment effect (att.pr) for the treatment group is

\[ \frac{1}{\sum_{i=1}^n t_i}\sum_{i:t_i=1}^n \left\{ Y_{ij}(t_i=1) - \widehat{Y_{ij}(t_i=0)}\right\} \textrm{ for } j = 1,2, \]

where \(t_i\) is a binary explanatory variable defining the treatment (\(t_i=1\)) and control (\(t_i=0\)) groups. Variation in the simulations is due to uncertainty in simulating \(\widehat{Y_{ij}(t_i=0)}\), the counterfactual predicted value of \(Y_{ij}\) for observations in the treatment group, under the assumption that everything stays the same except that the treatment indicator is switched to \(t_i=0\).
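The joint probabilities \(\pi_{rs}\), first differences, and risk ratios defined in this section can be sketched for a single set of parameter values using base R alone. The helper functions p11() and joint_probs() are hypothetical names introduced here, and the values of \(\mu_1\), \(\mu_2\), and \(\rho\) are made up for illustration. This is not how Zelig or VGAM compute these quantities; it is only a minimal numerical check of the formulas, assuming \(|\rho| < 1\). Zelig repeats the equivalent calculation for every simulated draw of \(\beta_1\), \(\beta_2\), and \(\beta_3\).

```r
# Pr(Y1* >= 0, Y2* >= 0) for latent means mu1, mu2 and correlation rho.
# Writing Y1* = mu1 + z with z standard Normal, Y2* given z is Normal with
# mean mu2 + rho * z and variance 1 - rho^2, so the double integral over the
# bivariate Normal density reduces to a single integral over z >= -mu1.
p11 <- function(mu1, mu2, rho) {
  integrand <- function(z) {
    dnorm(z) * pnorm((mu2 + rho * z) / sqrt(1 - rho^2))
  }
  integrate(integrand, lower = -mu1, upper = Inf)$value
}

# All four joint probabilities, using the marginals Pr(Y_j = 1) = pnorm(mu_j).
joint_probs <- function(mu1, mu2, rho) {
  pi11 <- p11(mu1, mu2, rho)
  pi10 <- pnorm(mu1) - pi11
  pi01 <- pnorm(mu2) - pi11
  pi00 <- 1 - pi11 - pi10 - pi01
  c(pi00 = pi00, pi01 = pi01, pi10 = pi10, pi11 = pi11)
}

# Hypothetical linear predictors at the baseline (x) and alternative (x1)
# covariate profiles.
pi_x  <- joint_probs(mu1 = -0.5, mu2 = 0.2, rho = 0.3)
pi_x1 <- joint_probs(mu1 =  0.5, mu2 = 1.0, rho = 0.3)

fd <- pi_x1 - pi_x   # first differences, one per joint outcome
rr <- pi_x1 / pi_x   # risk ratios, one per joint outcome

pi_x
fd
rr
```

Since each set of joint probabilities sums to one, the four first differences sum to zero, which matches the pattern in the fd table of the basic example, where three entries are negative and one is positive.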

Output Values

The output of each Zelig command contains useful information which you may view. For example, if you run z.out <- zelig(cbind(y1, y2) ~ x, model = "bprobit", data), then you may examine the available information in z.out by using names(z.out), see the coefficients by using z.out$coefficients, and obtain a default summary of information through summary(z.out). Other elements available through the $ operator are listed below.

From the zelig() output object z.out, you may extract:

- coefficients: the named vector of coefficients.

- fitted.values: an \(n \times 4\) matrix of the in-sample fitted values.

- predictors: an \(n \times 3\) matrix of the linear predictors \(x_j \beta_j\).

- residuals: an \(n \times 3\) matrix of the residuals.

- df.residual: the residual degrees of freedom.

- df.total: the total degrees of freedom.

- rss: the residual sum of squares.

- y: an \(n \times 2\) matrix of the dependent variables.

- zelig.data: the input data frame if save.data = TRUE.

From summary(z.out), you may extract:

- coef3: a table of the coefficients with their associated standard errors and \(t\)-statistics.

- cov.unscaled: the variance-covariance matrix.

- pearson.resid: an \(n \times 3\) matrix of the Pearson residuals.

See also

The bivariate probit function is part of the VGAM package by Thomas Yee. In addition, advanced users may wish to refer to help(vglm) in the VGAM library.

Yee TW (2015). Vector Generalized Linear and Additive Models: With an Implementation in R. Springer, New York, USA.

Yee TW and Wild CJ (1996). “Vector Generalized Additive Models.” Journal of Royal Statistical Society, Series B, 58 (3), pp. 481-493.

Yee TW (2010). “The VGAM Package for Categorical Data Analysis.” Journal of Statistical Software, 32 (10), pp. 1-34. <URL: http://www.jstatsoft.org/v32/i10/>.

Yee TW and Hadi AF (2014). “Row-column interaction models, with an R implementation.” Computational Statistics, 29 (6), pp. 1427-1445.

Yee TW (2017). VGAM: Vector Generalized Linear and Additive Models. R package version 1.0-3, <URL: https://CRAN.R-project.org/package=VGAM>.

Yee TW (2013). “Two-parameter reduced-rank vector generalized linear models.” Computational Statistics and Data Analysis. <URL: http://ees.elsevier.com/csda>.

Yee TW, Stoklosa J and Huggins RM (2015). “The VGAM Package for Capture-Recapture Data Using the Conditional Likelihood.” Journal of Statistical Software, 65 (5), pp. 1-33. <URL: http://www.jstatsoft.org/v65/i05/>.